Are we ready for AI?

January 2nd this year marks the centenary of the birth of Isaac Asimov, one of the most popular science writers and popularizers in history. Asimov is the author of "I, Robot", one of the most important works of science fiction. The book is a collection of short stories about intelligent robots.

The third of the stories, titled "Reason", is about Cutie, a robot prototype so advanced that it has self-learning capabilities. The problem arises when this causes it to become self-aware, and it suddenly begins to think about existential issues. The humans who live with it at the station try to tell it the truth: Cutie is an advanced robot prototype that was designed to replace them. But Cutie not only rejects the humans' explanations, it ends up regarding them as inferior beings. The humans live in great concern until they realize that Cutie not only means them no harm, but also perfectly carries out the mission for which it was originally designed: to take care of the station.

As machines grow smarter, how should they be treated and viewed in society? Once machines can act in a similar way to humans, how should they be governed? Should we consider machines as humans or as objects? What level of responsibility do we attribute to the devices themselves, and what level to the people who supposedly control them? What about the companies that created them?

In March 2018, an autonomous car operated by Uber killed a pedestrian, sparking considerable controversy. People were outraged that a machine had killed a human. Whom should we hold responsible? The driver? Uber? The government, for not regulating properly?

Despite evidence suggesting that autonomous vehicles have much lower mortality rates than human-driven vehicles, the questions of responsibility and control remain worrying. Asimov's lesson is that we must implement laws and regulations now to protect ourselves in the future. And it all gets complicated because the debate quickly brings us to another, less scientific dimension: ethics.

As we begin to see autonomous cars on our streets, some organizations have already opened the debate. But as we can see, the ethics of how an autonomous vehicle should behave in certain situations is more complicated than it seems. Should the car hit a wall, killing its passengers to save pedestrians? Or vice versa? What if one group were seniors and the other teenagers? Would that change the car's behavior?

By replacing the human driver with an artificial intelligence controller, automakers take on a great moral and legal responsibility that no one has ever had before. The human driver was usually responsible for the accidents caused by their decisions or actions; now that responsibility is shifting to the artificial intelligence controller, which is capable of both saving and taking lives.

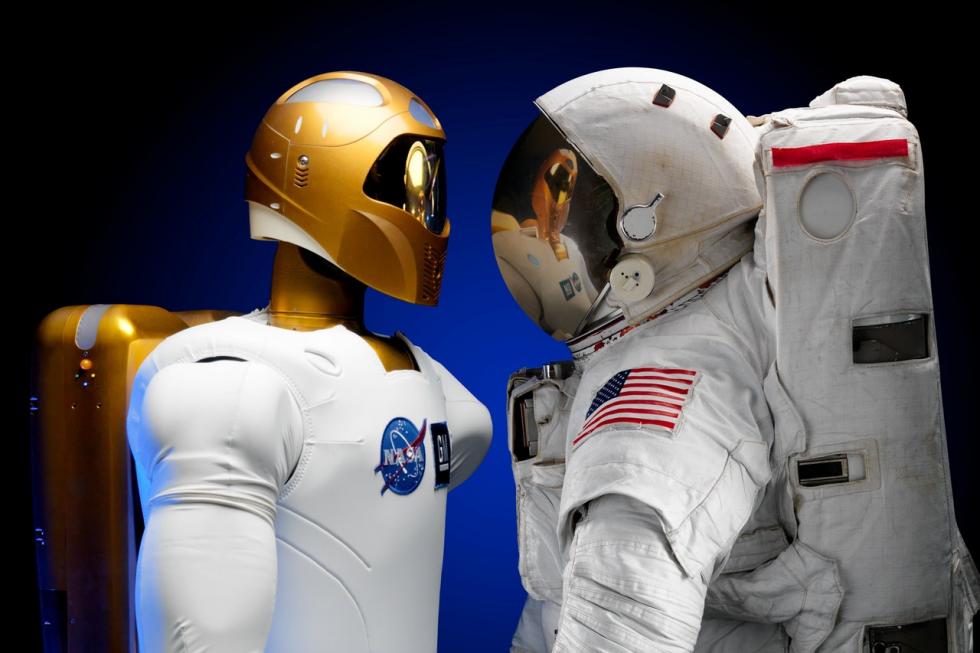

In Asimov's story, the robot was designed to replace humans at a space station. Indeed, one of the main challenges of AI is technological unemployment, that is, unemployment caused by the introduction of new technologies. In the coming years, we are likely to witness significant changes in the labor market: jobs will become obsolete, new ones will arise, industries will be radically transformed, and employment patterns and relationships will be redefined. At the same time, technology will drive the creation of new roles, positions and even scientific specializations, while freeing people from monotonous, low-value work so that they can engage in more creative activities.

Technological unemployment is already a growing concern in business and political circles. As an example, one of the candidates in the Democratic primaries in the US, Andrew Yang, has made his flagship proposal a payment of $1,000 a month to every American citizen over the age of 18, according to The New York Times. Yang argues that his experience working in Silicon Valley has given him insights into how automation could affect the economy in the years to come.

Being prepared for AI means much more than learning to coexist with machines or learning how to program them. Beyond that, it means preparing the legal, regulatory and ethical frameworks for what is to come. It means seizing the opportunity provided by AI to improve our lives. That is why initiatives such as the European Union's white paper on AI or the recently announced AI strategy for Catalonia are certainly good news. Undoubtedly, countries that prioritize technological development will be better prepared, and this will allow them to create more quality jobs and improve the well-being of their citizens.